Building Blocks of Semantic Kernel

Hi everyone! This post is a continuation of a series about Semantic Kernel. Over time, I will update this page with links to the individual posts:

Getting Started with Semantic Kernel (Part 1)

Getting Started with Semantic Kernel (Part 2)

This Post - Building Blocks of Semantic Kernel

Getting Started with Foundry Local & Semantic Kernel

Getting Started with Ollama & Semantic Kernel

Getting Started with LMStudio & Semantic Kernel

So far, we have covered the theoretical aspects of Gen AI and LLMs. In this post, we will continue with the theoretical aspects of Semantic Kernel.

Let me reiterate what I said in Part 1: Semantic Kernel is a development kit.

According to Wikipedia, a software development kit (SDK) is a collection of software development tools.

In the context of Semantic Kernel, the development kit provides various frameworks and components that can be used to build AI applications.

Semantic Kernel Components

Semantic Kernel provides a set of components that can be used individually or in combination to build AI applications. These components include:

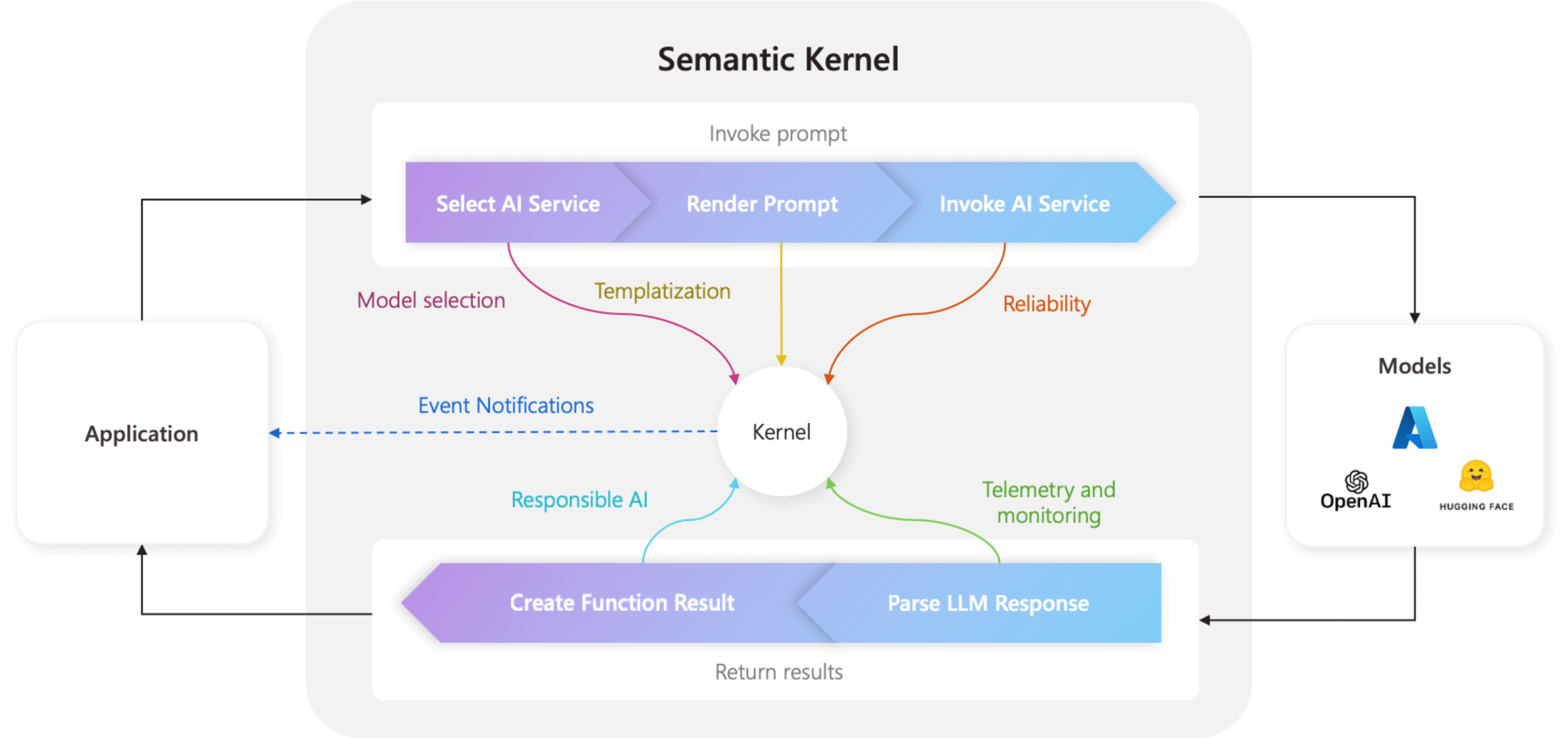

Kernel

Kernel is the core component of Semantic Kernel. It is basically a dependency container that holds two kinds of components: Services and Plugins.

Let’s take a look at the two components of Kernel:

| Component | Description |
|---|---|
| Services | Just like the services in any other .NET project, these provide capabilities such as logging, caching, and HttpClient. The only difference is that they also include AI-specific services, such as Chat Completion and Vector Store, which are provided via connectors. |
| Plugins | Components that extend the functionality of an LLM. We will explore this topic in more detail in future posts. |
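To make the "dependency container" idea a bit more concrete, here is a minimal sketch in plain Python. It is not the Semantic Kernel API: `ToyKernel`, `add_service`, `add_plugin` and `invoke` are names I made up for illustration, and the "chat completion" service is just a fake function.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToyKernel:
    """Hypothetical stand-in for a kernel: it only stores services and plugins."""

    services: dict[str, Any] = field(default_factory=dict)
    plugins: dict[str, dict[str, Callable[..., Any]]] = field(default_factory=dict)

    def add_service(self, name: str, service: Any) -> None:
        # Register a service (logging, HTTP client, chat completion, ...).
        self.services[name] = service

    def add_plugin(self, name: str, functions: dict[str, Callable[..., Any]]) -> None:
        # Register a plugin as a named collection of functions.
        self.plugins[name] = functions

    def invoke(self, plugin: str, function: str, **kwargs: Any) -> Any:
        # Look the function up by plugin and function name, then call it.
        return self.plugins[plugin][function](**kwargs)


kernel = ToyKernel()
# A fake AI service; a real one would call a model through a connector.
kernel.add_service("chat_completion", lambda prompt: f"(fake reply to: {prompt})")
kernel.add_plugin("math", {"add": lambda a, b: a + b})

result = kernel.invoke("math", "add", a=2, b=3)
```

The point of the sketch is only that everything the application needs lives in one place, so any part of the pipeline can ask the kernel for a service or a plugin function by name.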

Connectors

Connectors are the components that connect Semantic Kernel to external services. There are two types of connectors:

| Connector | Description |
|---|---|
| AI Connectors | These connectors provide an abstraction layer that exposes multiple AI service types from different providers, such as Azure OpenAI or local LLMs, via a common interface. Supported services include Chat Completion, Text Generation, Embedding Generation, Text to Image, Image to Text, Text to Audio and Audio to Text. |
| Vector Store Connectors | These connectors provide an abstraction layer that exposes vector stores from different providers, such as Qdrant, Azure AI Search or Chroma, via a common interface. |

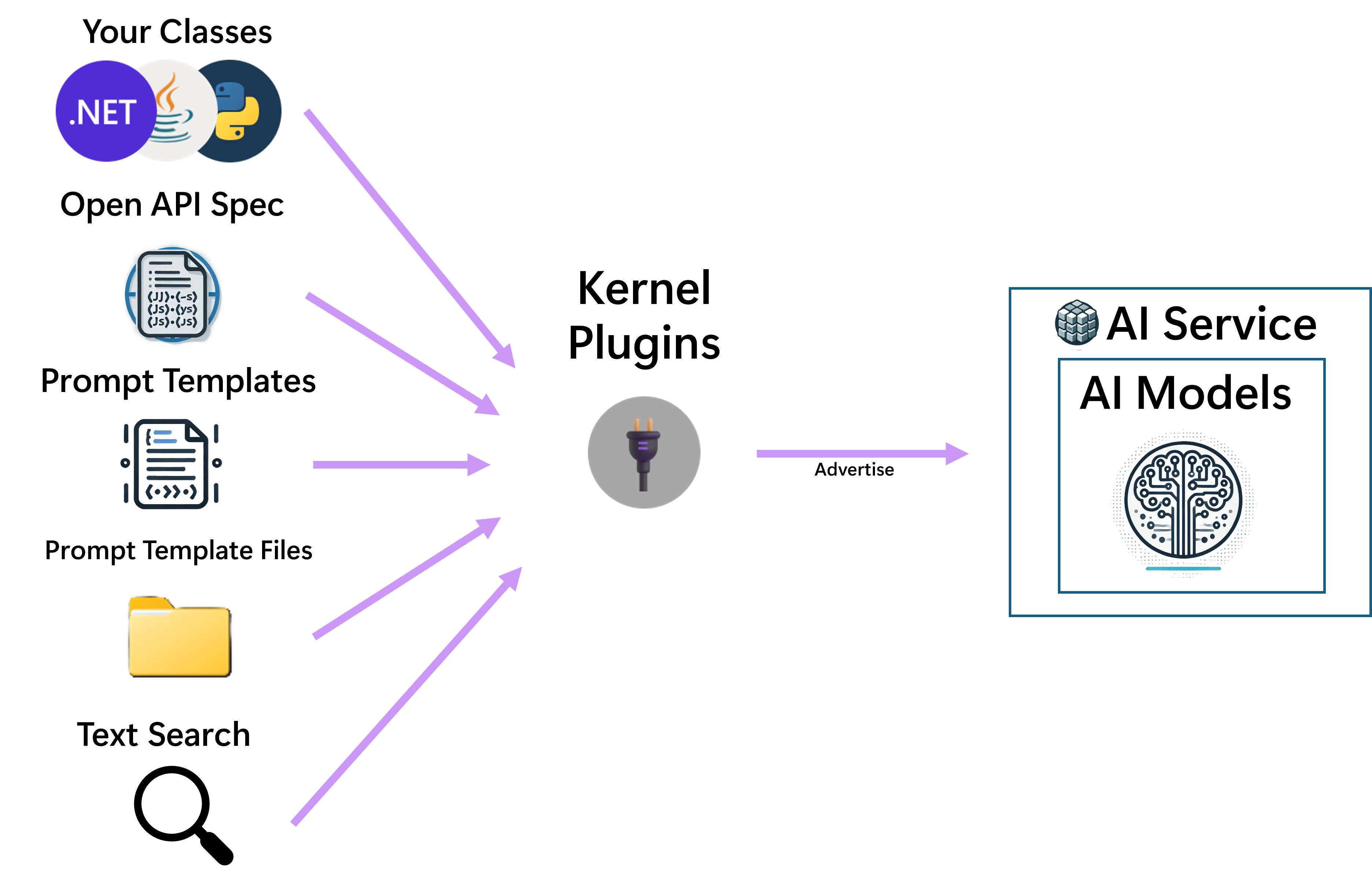
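The "common interface" part of connectors can be sketched in a few lines of plain Python. This is a toy model under my own assumptions, not Semantic Kernel code: `ChatCompletionService`, `FakeCloudChat` and `FakeLocalChat` are hypothetical names, and neither class talks to a real provider.

```python
from typing import Protocol


class ChatCompletionService(Protocol):
    """Hypothetical common interface that every chat connector satisfies."""

    def complete(self, prompt: str) -> str: ...


class FakeCloudChat:
    # Stand-in for a hosted provider; returns a canned string.
    def complete(self, prompt: str) -> str:
        return f"[cloud] {prompt}"


class FakeLocalChat:
    # Stand-in for a locally hosted model; returns a canned string.
    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"


def ask(service: ChatCompletionService, prompt: str) -> str:
    # The caller depends only on the interface, not on the provider.
    return service.complete(prompt)


replies = [ask(service, "Hello") for service in (FakeCloudChat(), FakeLocalChat())]
```

Because `ask` only knows about the interface, swapping one provider for another should not require touching the calling code, which is the benefit the abstraction layer is meant to give.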

Functions and Plugins

These are components that extend the functionality of an LLM. A plugin is a collection of named functions that can be used to perform specific tasks. Functions are the individual units of work that can be executed by Semantic Kernel. As mentioned earlier, plugins can be registered with the kernel, which allows the kernel to use them in two ways:

- Advertise them to the chat completion AI, so that the AI can choose them for invocation.

- Make them available to be called from a template during template rendering.

The KernelFunction attribute is used to define a function. In general, a function should have a well-defined description and input/output parameters that the AI can understand.

The diagram below shows the different sources of plugins:

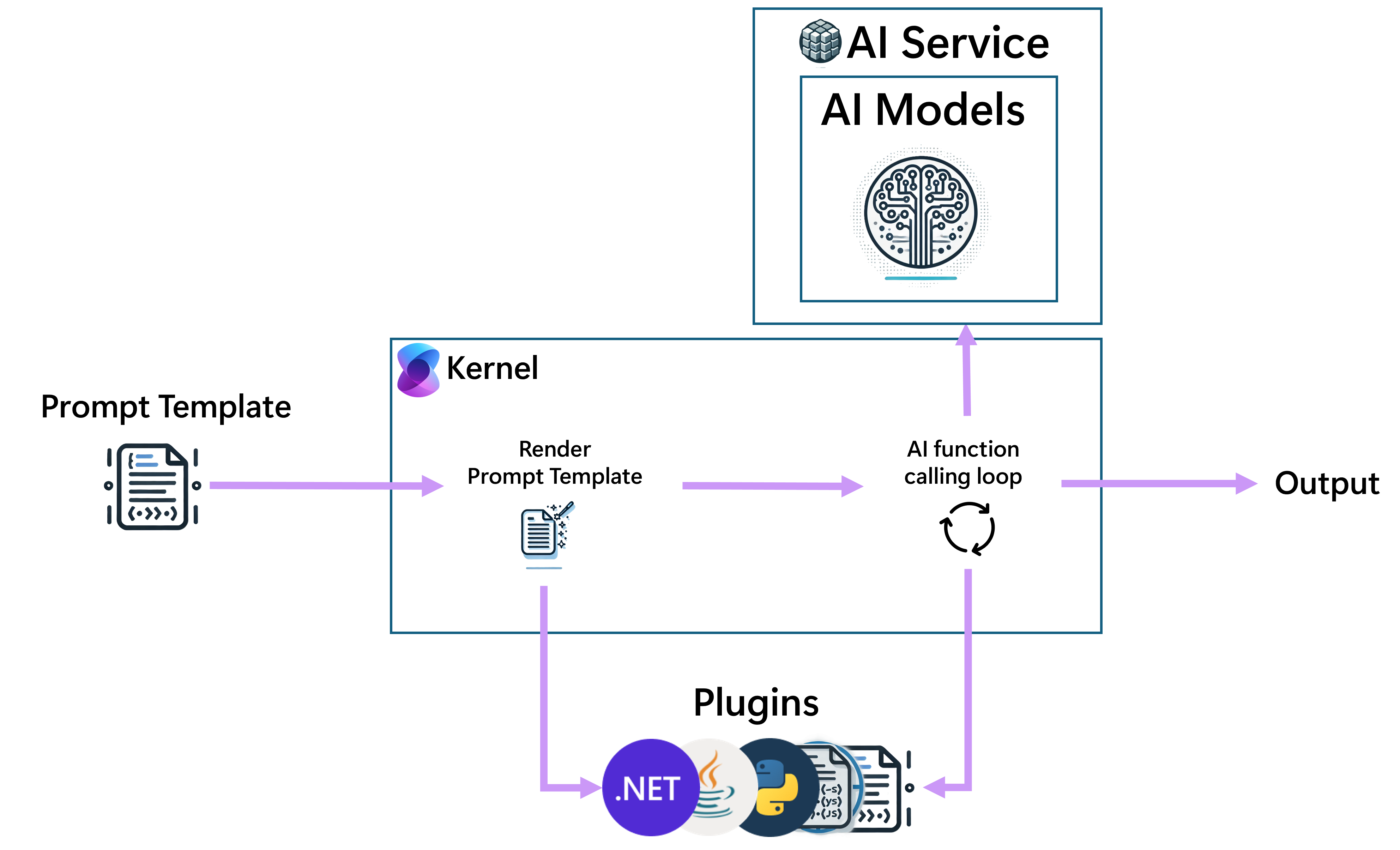
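To show why the description and parameters matter, here is a small plain-Python sketch of what "advertising" a plugin's functions could look like. It is only an illustration: `toy_kernel_function`, `WeatherPlugin` and `advertise` are hypothetical names of mine and do not come from Semantic Kernel.

```python
import inspect
from typing import Any, Callable


def toy_kernel_function(description: str) -> Callable:
    """Hypothetical decorator that attaches a description to a function."""

    def decorator(func: Callable) -> Callable:
        func.toy_description = description
        return func

    return decorator


class WeatherPlugin:
    @toy_kernel_function("Returns a canned weather report for a city.")
    def get_weather(self, city: str) -> str:
        return f"It is sunny in {city}."


def advertise(plugin: Any) -> list[dict]:
    """Collect the metadata a model would need to pick a function."""
    adverts = []
    for name, member in inspect.getmembers(plugin, inspect.ismethod):
        description = getattr(member, "toy_description", None)
        if description is None:
            continue  # Skip methods that were not marked as functions.
        parameters = list(inspect.signature(member).parameters)
        adverts.append(
            {"name": name, "description": description, "parameters": parameters}
        )
    return adverts


adverts = advertise(WeatherPlugin())
```

Here `adverts` holds the name, description and parameter names of `get_weather`. The model never sees the function body, so a vague description or unclear parameter names would leave it guessing about when and how to call the function.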

Prompt Templates

Prompt templates allow you to create instructions that guide the LLM in generating responses. In general, a template combines context and/or a persona with the user input and the function output, if any. See the following diagram for where prompt templates fit in the overall architecture of Semantic Kernel:

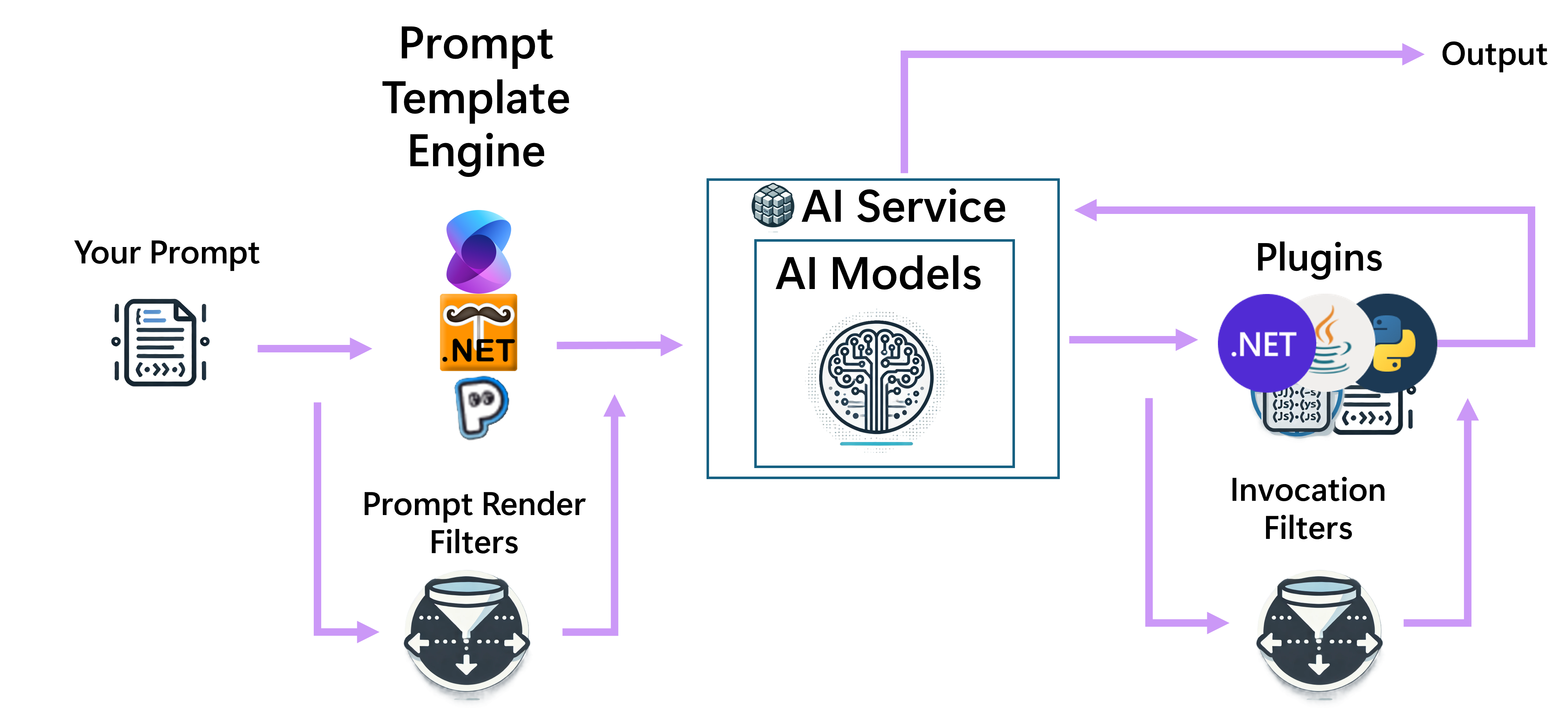
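As a rough illustration of template rendering, here is a tiny renderer in plain Python. The `{{$name}}` placeholder style is modelled on Semantic Kernel's default template syntax as I understand it, but the `render` function itself is a hypothetical helper of mine and handles nothing beyond simple variable substitution.

```python
import re


def render(template: str, variables: dict[str, str]) -> str:
    """Replace every {{$name}} placeholder with the matching variable."""

    def substitute(match: re.Match) -> str:
        return variables[match.group(1)]

    return re.sub(r"\{\{\$(\w+)\}\}", substitute, template)


template = "You are {{$persona}}. Answer the question: {{$input}}"
prompt = render(
    template,
    {"persona": "a helpful librarian", "input": "What is an SDK?"},
)
```

After rendering, `prompt` is the plain string that would be sent to the model: the persona and the user input have been merged into a single instruction.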

Filters

Filters are components that provide a way to take action before and after function invocation and prompt rendering in the Semantic Kernel pipeline.

Please note that prompt templates are always converted to a KernelFunction before execution, so both function and prompt filters will be invoked for a prompt template. Function filters are the outer filters and prompt filters are the inner filters.
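The outer/inner ordering is easier to see in a trace. The following plain-Python sketch is a toy pipeline of my own (all function names are hypothetical and the model call is faked); it simply records the order in which each stage runs.

```python
from typing import Callable

log: list[str] = []


def function_filter(next_step: Callable[[], str]) -> str:
    # Outer filter: wraps the whole function invocation.
    log.append("function filter: before")
    result = next_step()
    log.append("function filter: after")
    return result


def prompt_filter(next_step: Callable[[], str]) -> str:
    # Inner filter: wraps only the prompt rendering step.
    log.append("prompt filter: before")
    rendered = next_step()
    log.append("prompt filter: after")
    return rendered


def render_prompt() -> str:
    log.append("render prompt")
    return "Say hello to Ada."


def invoke_prompt_function() -> str:
    # A prompt template run as a function: render first, then call the model.
    prompt = prompt_filter(render_prompt)
    log.append("call model")
    return f"(fake reply to: {prompt})"


reply = function_filter(invoke_prompt_function)
```

In this sketch the log starts and ends with the function filter, and the prompt filter entries sit inside it around the rendering step, which matches the "outer" and "inner" description above.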

See the following diagram for where filters fit in the overall architecture of Semantic Kernel:

Semantic Kernel Frameworks

Semantic Kernel provides a set of frameworks as well, which can be used together or independently to build more advanced applications.

Agent Framework

Agent Framework provides a way to create agents that can interact with each other and/or with the user to perform tasks autonomously or semi-autonomously.

We will explore this topic in more detail in future posts; in the meantime, see the official documentation.

Process Framework

Process Framework provides a way to define and manage business processes within the Semantic Kernel. It allows you to create complex workflows by chaining together multiple functions and components, utilizing an event-driven model to manage workflow execution.
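To give a feel for what "event-driven" means here, below is a very small plain-Python sketch. It does not use the Process Framework: the event names, the `handlers` table and the `run` function are all hypothetical, and a real process would have far more going on (state, error handling, parallel steps).

```python
from collections import deque
from typing import Callable, Optional

# A handler receives a payload and may emit one (event, payload) pair.
Handler = Callable[[str], Optional[tuple[str, str]]]


def validate(order: str) -> tuple[str, str]:
    # Clean up the incoming order and announce that it is valid.
    return ("order_validated", order.strip())


def ship(order: str) -> tuple[str, str]:
    # Pretend to ship the order and announce the result.
    return ("order_shipped", f"shipped:{order}")


# Each step subscribes to exactly one event in this toy model.
handlers: dict[str, Handler] = {
    "order_received": validate,
    "order_validated": ship,
}


def run(start_event: str, payload: str) -> tuple[list[str], str]:
    """Process events until no step emits a new one."""
    queue = deque([(start_event, payload)])
    trace: list[str] = []
    data = payload
    while queue:
        event, data = queue.popleft()
        trace.append(event)
        handler = handlers.get(event)
        if handler is None:
            continue  # Nobody listens to this event, so the chain ends.
        emitted = handler(data)
        if emitted is not None:
            queue.append(emitted)
    return trace, data


trace, final_payload = run("order_received", "  book ")
```

Notice that no step calls the next one directly. Each step only emits an event, and the workflow emerges from which steps are subscribed to which events.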

We will also explore this topic in more detail in future posts; in the meantime, see the official documentation.

I know this is the third post and we have not written any real Semantic Kernel code yet. That is because I wanted to make sure we are on the same page and understand the basic concepts of Semantic Kernel first. In the next post, we will start with the code and build a simple application using Semantic Kernel.