Download Files From Azure Blob Storage Efficiently Using RecyclableMemoryStream

Recently, I was working on a project where we had to download multiple large files from Azure Blob Storage and process them further. When the application reached the production environment and started receiving real files, we observed very high memory consumption. On top of that, the application was hosted on a shared Windows-based App Service plan, which made things worse for the other applications on that plan too.

After analyzing the issue, we found that the cause was the way we were downloading the files. In this post, we will see how we can download files from Azure Blob Storage efficiently using RecyclableMemoryStream.

The Problem

Let us first understand the problem by looking at how we were downloading the files from Azure Blob Storage.

The approach is straightforward. We use BlobServiceClient to get the list of blobs from the container. Then we loop through the blobs' metadata and download the files one by one, each into a MemoryStream.
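A minimal sketch of this sequential approach, assuming the Azure.Storage.Blobs SDK (version 12). The class name, method name, and parameters are illustrative and are not the original listing; the processing step is left as a comment.

```csharp
using System.IO;
using System.Threading;
using System.Threading.Tasks;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;

// Hypothetical helper, named for illustration only.
public static class SequentialBlobDownloader
{
    public static async Task DownloadAllAsync(
        string connectionString,
        string containerName,
        CancellationToken ct = default)
    {
        var serviceClient = new BlobServiceClient(connectionString);
        BlobContainerClient container = serviceClient.GetBlobContainerClient(containerName);

        // Enumerate the blob listing, then download each blob in turn.
        await foreach (BlobItem blob in container.GetBlobsAsync(cancellationToken: ct))
        {
            BlobClient blobClient = container.GetBlobClient(blob.Name);

            // A fresh MemoryStream per file: its backing array grows as data
            // arrives, which is where the large allocations come from.
            using var stream = new MemoryStream();
            await blobClient.DownloadToAsync(stream, ct);
            stream.Position = 0;

            // ... process the downloaded content here ...
        }
    }
}
```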

Let us benchmark the above code using BenchmarkDotNet, with its memory diagnoser enabled so that the Gen 0, Gen 1, and Gen 2 collection counts and the allocated memory are reported.

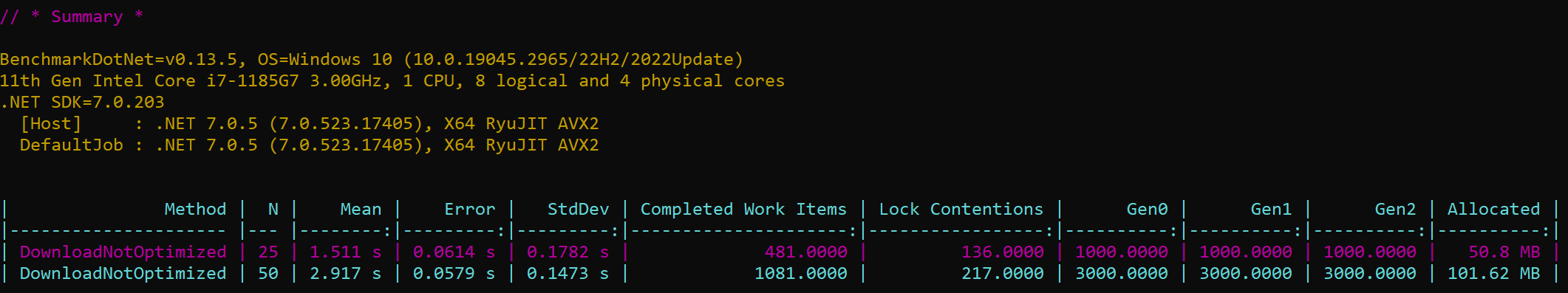

Below is the benchmark result for the same.

If you see the above benchmark result, you will notice that Gen 0, Gen 1 and Gen 2 all has some value and also the memory allocation looks high. But what exactly is Gen 0, Gen 1 and Gen 2?

What are Gen 0, Gen 1 and Gen 2?

I find the following explanation, drawn from the Microsoft Docs articles on the large object heap (LOH) and garbage collection fundamentals, very helpful.

The .NET garbage collector (GC) divides objects into small and large objects. The small object heap (SOH) stores objects smaller than 85,000 bytes. The large object heap (LOH) stores objects that are 85,000 bytes or larger.

The garbage collector is a generational collector. It has three generations: Gen 0, Gen 1, and Gen 2.

Gen 0 is the youngest generation and contains short-lived objects. An example of a short-lived object is a temporary variable. Garbage collection occurs most frequently in this generation.

Gen 1 contains short-lived objects and serves as a buffer between short-lived objects and long-lived objects.

Gen 2 contains long-lived objects that have survived earlier collections. An example of a Gen 2 object is an object in a server application that holds static data that is live for the duration of the process. Large objects (85,000 bytes or larger) live on the LOH, which is logically part of Gen 2 and is collected only during a Gen 2 collection.

In our case, we are downloading each file into a MemoryStream. A MemoryStream is backed by a single contiguous byte array that is reallocated at a larger size as data is written. Since our files are larger than 85,000 bytes, those backing arrays end up on the LOH.
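The threshold can be observed directly. The following is a small sketch, not part of the original project; the class and method names are made up for illustration. It relies on the fact that GC.GetGeneration reports LOH objects as Gen 2.

```csharp
using System;

// Hypothetical demo class, named for illustration only.
public static class LohDemo
{
    // Allocates a byte array of the given length and reports the GC
    // generation the runtime places it in.
    public static int GenerationOfNewArray(int length)
    {
        var buffer = new byte[length];
        return GC.GetGeneration(buffer);
    }

    public static void Main()
    {
        // Well below the threshold: allocated on the small object heap,
        // so this typically reports generation 0.
        Console.WriteLine(GenerationOfNewArray(1_000));

        // At the 85,000-byte threshold: allocated on the LOH,
        // which is reported as generation 2.
        Console.WriteLine(GenerationOfNewArray(85_000));
    }
}
```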

The Solution

So, from the above explanation, if we can avoid repeated LOH allocations, we should be able to reduce memory consumption and improve performance. Microsoft provides a library called Microsoft.IO.RecyclableMemoryStream for exactly this purpose. Its documentation, in the project's GitHub repository, explains in detail how it works.

The best part is that its semantics are close to the original System.IO.MemoryStream implementation, and it is intended to be a drop-in replacement as much as possible.

So, let's use it in our code.
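A minimal sketch of the change, assuming the Microsoft.IO.RecyclableMemoryStream NuGet package and the Azure.Storage.Blobs SDK. The class and method names are illustrative, not the original listing. The only difference from a plain MemoryStream version is where the stream comes from.

```csharp
using System.IO;
using System.Threading;
using System.Threading.Tasks;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;
using Microsoft.IO;

// Hypothetical helper, named for illustration only.
public static class PooledBlobDownloader
{
    // One manager for the whole process; it owns the pooled buffers.
    private static readonly RecyclableMemoryStreamManager StreamManager =
        new RecyclableMemoryStreamManager();

    public static async Task DownloadAllAsync(
        BlobContainerClient container,
        CancellationToken ct = default)
    {
        await foreach (BlobItem blob in container.GetBlobsAsync(cancellationToken: ct))
        {
            // GetStream hands out a stream backed by pooled blocks; disposing
            // it returns the blocks to the pool instead of leaving garbage.
            using Stream stream = StreamManager.GetStream(blob.Name);
            await container.GetBlobClient(blob.Name).DownloadToAsync(stream, ct);
            stream.Position = 0;

            // ... process the downloaded content here ...
        }
    }
}
```

The string passed to GetStream is only a diagnostic tag. Declaring the variable as Stream keeps the sketch independent of the exact return type of GetStream, which differs between major versions of the library.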

Note that RecyclableMemoryStreamManager should be declared once and live for the entire lifetime of the process. It is thread safe, so we can use it from multiple threads. Let's update the code to download the files in parallel instead of sequentially.

Here, SemaphoreSlim is used to limit the number of downloads running concurrently. In our case, we limit it to 10. You can change this as per your requirement.
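The throttling pattern can be sketched as follows, again assuming the Azure.Storage.Blobs SDK and Microsoft.IO.RecyclableMemoryStream. The names and structure are illustrative, not the original listing.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Threading;
using System.Threading.Tasks;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;
using Microsoft.IO;

// Hypothetical helper, named for illustration only.
public static class ParallelBlobDownloader
{
    private const int MaxConcurrency = 10; // the limit mentioned in the text

    private static readonly RecyclableMemoryStreamManager StreamManager =
        new RecyclableMemoryStreamManager();

    public static async Task DownloadAllAsync(
        BlobContainerClient container,
        CancellationToken ct = default)
    {
        var throttle = new SemaphoreSlim(MaxConcurrency);
        var tasks = new List<Task>();

        await foreach (BlobItem blob in container.GetBlobsAsync(cancellationToken: ct))
        {
            // Wait for a free slot before starting the next download.
            await throttle.WaitAsync(ct);
            tasks.Add(DownloadOneAsync(container, blob.Name, throttle, ct));
        }

        await Task.WhenAll(tasks);
    }

    private static async Task DownloadOneAsync(
        BlobContainerClient container,
        string blobName,
        SemaphoreSlim throttle,
        CancellationToken ct)
    {
        try
        {
            using Stream stream = StreamManager.GetStream(blobName);
            await container.GetBlobClient(blobName).DownloadToAsync(stream, ct);
            stream.Position = 0;

            // ... process the downloaded content here ...
        }
        finally
        {
            // Always free the slot, even if the download fails.
            throttle.Release();
        }
    }
}
```

Releasing the semaphore in a finally block matters here: without it, a single failed download would permanently reduce the number of available slots.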

Let’s update the benchmark code to use the optimized version.

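A benchmark along these lines can be sketched with BenchmarkDotNet. To stay self-contained, this sketch does not call Azure at all: it writes a synthetic payload into each kind of stream, so it isolates the stream allocation behavior rather than reproducing the measurements in this post. The class name, payload size, and chunk size are assumptions.

```csharp
using System;
using System.IO;
using BenchmarkDotNet.Attributes;
using BenchmarkDotNet.Running;
using Microsoft.IO;

// [MemoryDiagnoser] adds the Gen 0/1/2 and Allocated columns to the report.
[MemoryDiagnoser]
public class StreamBenchmarks
{
    private static readonly RecyclableMemoryStreamManager Manager =
        new RecyclableMemoryStreamManager();

    // 80 KB write chunk, small enough to stay off the LOH itself.
    private readonly byte[] _chunk = new byte[81_920];

    // Synthetic payload size in bytes (an assumption, not the post's data).
    [Params(10_000_000)]
    public int PayloadBytes;

    [Benchmark(Baseline = true)]
    public long PlainMemoryStream()
    {
        using var stream = new MemoryStream();
        Fill(stream);
        return stream.Length;
    }

    [Benchmark]
    public long PooledStream()
    {
        using Stream stream = Manager.GetStream();
        Fill(stream);
        return stream.Length;
    }

    // Writes PayloadBytes bytes to the stream in chunk-sized pieces.
    private void Fill(Stream stream)
    {
        for (int written = 0; written < PayloadBytes; written += _chunk.Length)
        {
            int count = Math.Min(_chunk.Length, PayloadBytes - written);
            stream.Write(_chunk, 0, count);
        }
    }
}

public static class Program
{
    public static void Main() => BenchmarkRunner.Run<StreamBenchmarks>();
}
```

BenchmarkDotNet expects to be run from a Release build.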

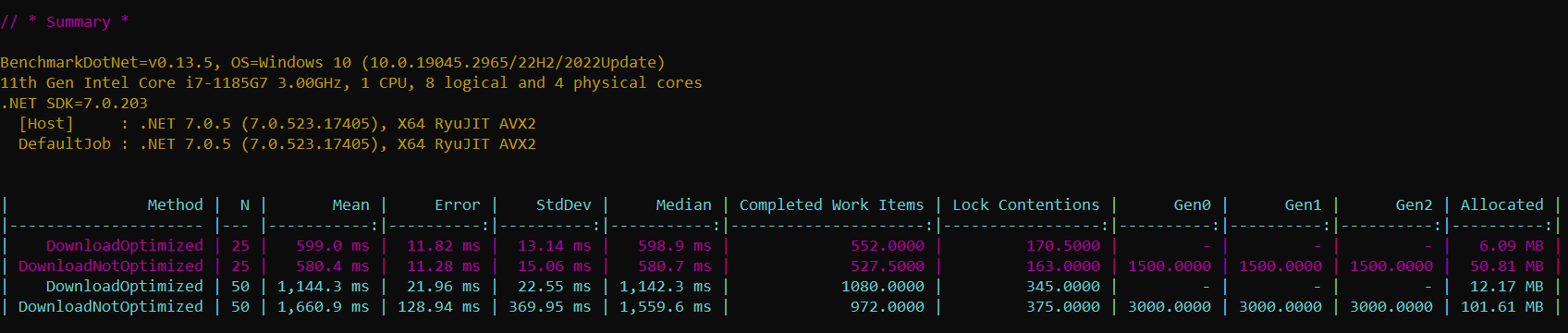

Below is the benchmark result for the same.

Conclusion

From the above result, we can clearly see a huge improvement in LOH allocation: it drops to zero. This is possible because RecyclableMemoryStreamManager eliminates LOH allocations by using pooled buffers rather than pooling the streams themselves.

The total memory allocation is also reduced significantly. Going by the benchmark figures, the original version allocates several times as much memory as the optimized one (a difference of ~734%), which is huge.

You can find the source code here.